DeepSeek vs. OpenAI: A Thoughtful Response to Archerman Capital’s Insights

The Rise of DeepSeek: A Bold New Challenger in the Global AI Race

DeepSeek, a leading Chinese AI company founded by Zhejiang University alumnus Wenfeng Liang, has emerged as a major player in the global artificial intelligence landscape. Originally the AI research division of the quantitative hedge fund High-Flyer (whose Chinese name is sometimes translated literally as “Magic Square”), DeepSeek has captured international attention with its innovative development model and groundbreaking technologies. Despite challenges such as hardware export restrictions, its highly efficient models have consistently ranked in the global top ten.

A standout achievement is DeepSeek's V3 model, which used only about 2,000 NVIDIA GPUs during training yet outperformed many Western counterparts in efficiency, setting new benchmarks for the industry. In January 2025, the company launched its reasoning model, R1, which demonstrated near-parity with OpenAI’s o1 model. Its precursor, R1-Zero, developed strong reasoning abilities through reinforcement learning alone, without the usual supervised fine-tuning, underscoring DeepSeek’s robust R&D capabilities.

DeepSeek’s advancements have earned widespread acclaim in Silicon Valley. Prominent investor Marc Andreessen called its achievements “astonishing.” Meanwhile, Archerman Capital™, a global growth equity firm headquartered in Boston with investment teams in New York and Silicon Valley, has taken keen interest in DeepSeek’s progress. Founded by Dr. Harry Archerman, a Harvard University PhD in applied physics, Archerman Capital™ is renowned for its first-principles approach and research-driven investment strategies. According to SVTR AI Venture Database, its portfolio includes leading AI companies such as Databricks, Scale AI, and Tenstorrent.

Silicon Valley Tech Review (SVTR) directly uploaded the document containing Archerman Capital's views on DeepSeek to both DeepSeek's and OpenAI's large language models. This allowed the models to analyze and generate responses to the insights from the investment firm. Here's how the two companies' AI systems responded:

DeepSeek’s AI Response: Analysis and Evaluation of the Document's Facts and Opinions

The document contains several insights about DeepSeek and its advancements in AI large language models. Below is a detailed analysis of the factual claims and opinions presented:

On Factual Claims

1. DeepSeek Is Not Merely a Derivative of U.S.-Based Models

The document asserts that DeepSeek is not a repackaging or distilled version of American models but is instead based on the Transformer architecture with its own innovations. This claim is credible, as the technical details provided (e.g., MoE, MLA, MTP) demonstrate DeepSeek’s independent R&D efforts. These innovations indicate significant advancements in architecture design and engineering optimization.

2. Core Architecture Built on Transformer with Innovations

Technical specifics such as MoE (Mixture of Experts), MLA (Multi-Head Latent Attention), MTP (Multi-Token Prediction), and CoT (Chain of Thought reasoning) are highlighted as cutting-edge AI technologies. DeepSeek’s optimization in engineering and performance efficiency suggests a strong foundation in state-of-the-art AI methods, particularly in large-model training and inference efficiency.

3. Training Costs Are Not as Advertised ($5.5 Million)

The document's claim that the advertised $5.5 million training cost may not tell the whole story is reasonable. Whether the costs of earlier research are counted, and how quickly compute prices are falling, can both produce large discrepancies. This is particularly true in the AI field, where cost metrics often vary depending on assumptions and methodologies.

On Opinions

1. DeepSeek Represents a Victory for Open Source Over Closed Source

The argument that DeepSeek demonstrates the triumph of open-source models has some merit. Open-source projects like Meta’s LLaMA have indeed propelled the AI community forward. As part of this ecosystem, DeepSeek could further accelerate the growth of open-source AI. However, the competition between open and closed-source models is ongoing. Closed-source models, such as OpenAI’s GPT series, still hold an edge in commercialization and cutting-edge exploration.

2. OpenAI’s “Brute Force” Strategy May Widen the Gap in the Future

The document rightly points out that OpenAI’s “brute force” approach—leveraging massive compute and data resources—has yielded impressive results. However, as technology advances, open-source models may close the gap. That said, OpenAI’s established dominance in commercialization and innovation could maintain or even widen the lead, depending on the pace and direction of future advancements.

3. DeepSeek Makes Open-Source Models Comparable to Closed-Source Models

This perspective holds some validity but should be interpreted with caution. While open-source models like DeepSeek have made remarkable strides in performance and efficiency, closed-source models still outperform in commercialization and high-stakes innovation. Open-source models excel in customizability and private deployment, but closed-source models may remain superior in handling complex and nuanced tasks.

4. Foundation Models Will Eventually Become Commoditized

This claim is reasonable. As AI technologies mature, foundation models are likely to become commoditized, shifting the focus of competition to their application in specific scenarios (B2B) and control over user entry points (B2C). This trend aligns with the current trajectory of the AI industry.

5. Demand for Compute Power Will Not Decrease

This viewpoint is valid. As model sizes grow and application scenarios become more complex, demand for compute power is likely to increase. In line with the Jevons paradox, improvements in efficiency may paradoxically lead to greater overall demand for computational resources.
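A toy constant-elasticity demand model shows when this effect appears. It assumes, purely for illustration, that demand for AI work scales as price^(-ε) and that an efficiency gain k cuts the compute needed per unit of work by a factor of k. Total compute then scales as k^(ε-1), so it rises only when demand is elastic (ε > 1). The function name and the elasticity values are hypothetical, not estimates for the AI market.

```python
def total_compute(efficiency_gain: float, elasticity: float,
                  base_demand: float = 1.0, compute_price: float = 1.0) -> float:
    """Compute consumed under a hypothetical constant-elasticity demand curve.

    efficiency_gain: factor k by which compute needed per unit of AI work shrinks.
    elasticity: assumed price elasticity of demand for AI work.
    """
    # Cheaper compute per unit of work lowers the effective price of that work.
    price_per_unit_work = compute_price / efficiency_gain
    # Constant-elasticity demand: lower price -> more work demanded.
    work_demanded = base_demand * price_per_unit_work ** (-elasticity)
    # Each unit of work now needs only 1/k units of compute.
    return work_demanded / efficiency_gain


if __name__ == "__main__":
    for eps in (0.5, 1.0, 1.5):  # hypothetical elasticities
        ratio = total_compute(10.0, eps) / total_compute(1.0, eps)
        print(f"elasticity={eps}: total compute x{ratio:.2f} after a 10x efficiency gain")
```

With a 10x efficiency gain, elasticities of 0.5, 1.0, and 1.5 give roughly 0.32x, 1x, and 3.16x the original compute, so whether the Jevons outcome occurs hinges on how strongly demand responds to lower prices.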

6. Demand for Data Will Remain High

This claim is also accurate. Data serves as the "fuel" for AI models, and as algorithms become more sophisticated, the need for high-quality data will only grow. This is especially true in handling complex tasks and long-tail scenarios, where data scarcity often presents significant challenges.

Conclusion

Most of the points in the document are well-founded, particularly the analysis of technical details and industry trends. The insights reflect important dynamics in the AI field, such as the evolving competition between open and closed-source models, the increasing demand for compute and data, and the gradual commoditization of foundation models.

However, given the rapid pace of AI development, the competitive landscape may shift dramatically in the coming years. Key issues such as open vs. closed-source competition, resource demands, and model commercialization will remain at the forefront of the AI industry’s evolution.

OpenAI Model's Response: Analysis of DeepSeek’s Technical Features and Market Impact

The document provides insights into DeepSeek’s technological innovations and market influence. Below is a detailed analysis of the main points, segmented into technical and market-level discussions.

Technical Analysis

1. Innovation and Optimization

Claim: DeepSeek employs technologies like Mixture of Experts (MoE) and Multi-Head Latent Attention (MLA) to enhance efficiency and inference capabilities.

Analysis: These techniques are well-supported by academic research, as MoE and MLA have demonstrated significant improvements in scaling large models efficiently. However, their successful implementation requires advanced engineering optimization. DeepSeek’s integration and enhancement of these methods reflect a practical and effective approach to innovation.

2. Training Costs

Claim: DeepSeek has reduced training costs to one-third of the industry standard, and reportedly to 1/20th of OpenAI’s costs.

Analysis: Models developed later can indeed leverage prior learnings and optimizations to reduce costs. However, comparing absolute costs can be misleading due to differences in accounting standards, hardware advancements, and cost structures. Caution is warranted when interpreting these figures.

3. Independence from Supervised Fine-Tuning (SFT)

Claim: Reinforcement Learning (RL) was employed to train the R1-Zero model, emphasizing autonomous reasoning capabilities.

Analysis: RL has proven effective for improving reasoning and strategic decision-making. However, completely forgoing SFT may limit performance on specific tasks that require domain-specific alignment. This approach appears more exploratory in nature than practical for broad application.

Market-Level Analysis

1. Open Source as a Strength

Claim: DeepSeek exemplifies the power of open source and could drive the growth of open-source models.

Analysis: Collaboration in the open-source community has indeed accelerated model improvement. However, the trade-offs between open and closed models in terms of security, quality control, and commercialization must be considered. Open-source ecosystems thrive on adaptability, while closed-source solutions often excel in controlled, high-stakes applications.

2. Compute and Data Requirements

Claim: The need for compute power and data will continue to grow.

Analysis: This aligns with prevailing trends. Compute and data remain critical for scaling models and addressing increasingly complex tasks. As model architectures and applications evolve, the demand for these resources is expected to expand further.

3. Commoditization of Models

Claim: Foundation models will eventually become commoditized, with B2B markets prioritizing productivity and B2C markets focusing on user engagement.

Analysis: This perspective reflects the current trajectory of AI development. As foundational technologies mature, the competitive edge will shift from model capabilities to industry-specific customization and application efficacy.

Conclusion

Most of the points presented in the document are grounded in current academic research and industrial practices, providing a credible and insightful perspective. However, there are areas where caution is warranted:

Training Cost Comparisons: Variations in methodologies and cost components make absolute comparisons unreliable.

Reinforcement Learning Without SFT: While intriguing from a research standpoint, this approach might face limitations in practical deployments that demand task-specific fine-tuning.

Overall, the document offers a balanced and well-informed analysis of DeepSeek’s contributions and challenges, highlighting both its potential and the broader trends shaping the AI industry.

Appendix

Here is the English translation of the document (Thoughts on DeepSeek):

DeepSeek has been a hot topic lately. At Archerman Capital, we have done some research and reflection on it that we would like to share with you. Enjoy! (Feel free to skip the gray-shaded sections if technical details are not your focus.)

Some Facts

DeepSeek is neither a “wrapper” (a repackaged shell) around U.S. large models nor a distilled version of them. While some Chinese large models take such approaches, DeepSeek does not.

The core architecture is still based on Transformers. DeepSeek introduces innovations and engineering enhancements to optimize efficiency through various methods. These include the Mixture of Experts (MoE), Multi-Head Latent Attention (MLA), Multi-Token Prediction (MTP), Chain of Thought (CoT), and the DualPipe algorithm. Additionally, DeepSeek explores reinforcement learning (RL) without relying on supervised fine-tuning (SFT) for training. Key technical highlights are as follows:

MoE (Mixture of Experts): Divides the model into multiple expert modules, each of which specializes in handling certain kinds of input. During training, these modules are distributed across computing devices for efficiency. For each token, only a subset of experts (37B parameters) is dynamically activated, greatly reducing the computational load compared to running the full model (671B parameters). DeepSeek addresses imbalanced workloads among experts with an auxiliary-loss-free load-balancing strategy and shared experts, rather than relying on auxiliary loss terms.
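The routing idea can be sketched in a few lines of Python. This is a toy illustration, not DeepSeek's code: it assumes sigmoid router affinities, top-k selection, one always-on shared expert, and a per-expert bias that is nudged by a hypothetical step size `gamma` after each batch, loosely following the auxiliary-loss-free balancing described in the DeepSeek-V3 technical report. All function names are hypothetical. With 37B of 671B parameters active, roughly 5.5% of the model runs per token.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def route_token(affinities: Sequence[float], bias: Sequence[float],
                top_k: int) -> List[Tuple[int, float]]:
    """Pick top_k routed experts for one token; return (expert index, gate weight) pairs.

    The per-expert bias only changes which experts are selected. Gate weights come
    from the unbiased sigmoid affinities, normalized over the selected experts.
    """
    scores = [1.0 / (1.0 + math.exp(-a)) for a in affinities]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    chosen = ranked[:top_k]
    total = sum(scores[i] for i in chosen)
    return [(i, scores[i] / total) for i in chosen]


def moe_forward(x: Vector, shared: Expert, experts: Sequence[Expert],
                affinities: Sequence[float], bias: Sequence[float], top_k: int) -> Vector:
    """The shared expert always runs; only the top_k routed experts run for this token."""
    out = shared(x)
    for i, gate in route_token(affinities, bias, top_k):
        out = [o + gate * y for o, y in zip(out, experts[i](x))]
    return out


def update_bias(bias: Sequence[float], loads: Sequence[int], gamma: float) -> List[float]:
    """Balancing step: lower the bias of overloaded experts and raise underloaded ones."""
    mean_load = sum(loads) / len(loads)
    new_bias = []
    for b, load in zip(bias, loads):
        if load > mean_load:
            new_bias.append(b - gamma)
        elif load < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias


if __name__ == "__main__":
    # Toy routed experts that scale their input; the shared expert is the identity.
    experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]

    def identity(v: Vector) -> Vector:
        return list(v)

    bias = [0.0] * 4
    # Hypothetical router affinities for three tokens; all of them favor expert 0.
    batch = [[2.0, 0.5, 0.1, -1.0], [1.5, -0.2, 0.8, 0.0], [2.2, 0.3, -0.5, 0.4]]
    loads = [0] * 4
    for aff in batch:
        print(moe_forward([1.0, -1.0], identity, experts, aff, bias, top_k=2))
        for i, _ in route_token(aff, bias, top_k=2):
            loads[i] += 1
    bias = update_bias(bias, loads, gamma=0.01)  # gamma is a hypothetical step size
    print("loads:", loads, "updated bias:", bias)
```

Because the bias enters only the selection step, an overloaded expert gradually gets picked less often without an extra loss term distorting the gate weights.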

MLA (Multi-Head Latent Attention): Enhances traditional multi-head attention by jointly compressing keys and values into a low-dimensional latent vector, from which per-head keys and values are reconstructed when needed. This reduces memory and computational costs during training and shrinks the KV cache during inference, since only the compact latent needs to be stored.
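The KV-cache saving can be checked with simple arithmetic. Standard multi-head attention caches a key and a value vector for every head, while MLA, as described in DeepSeek's V2 and V3 reports, caches one compressed latent vector per token plus a small decoupled positional (RoPE) key, and reconstructs per-head keys and values from the latent with up-projection matrices. The dimensions below are assumptions chosen to roughly match the published DeepSeek-V3 configuration; 2 bytes per cached value (BF16) is also assumed, and the helper names are hypothetical.

```python
def mha_cache_per_token(n_heads: int, head_dim: int) -> int:
    """Values cached per token per layer by standard multi-head attention (one key + one value per head)."""
    return 2 * n_heads * head_dim


def mla_cache_per_token(latent_dim: int, rope_dim: int) -> int:
    """Values cached per token per layer by MLA: a compressed KV latent plus a decoupled RoPE key."""
    return latent_dim + rope_dim


def kv_cache_bytes(values_per_token: int, n_layers: int, seq_len: int,
                   bytes_per_value: int = 2) -> int:
    """Total KV-cache size for one sequence, assuming 2-byte (BF16) storage by default."""
    return values_per_token * n_layers * seq_len * bytes_per_value


if __name__ == "__main__":
    # Assumed dimensions, roughly following the published DeepSeek-V3 configuration.
    n_heads, head_dim, latent_dim, rope_dim, n_layers = 128, 128, 512, 64, 61
    mha = mha_cache_per_token(n_heads, head_dim)
    mla = mla_cache_per_token(latent_dim, rope_dim)
    print(f"per token per layer: MHA={mha}, MLA={mla}, ratio={mha / mla:.1f}x")
    for name, per_token in (("MHA", mha), ("MLA", mla)):
        gib = kv_cache_bytes(per_token, n_layers, seq_len=32_768) / 2**30
        print(f"{name} cache for one 32K-token sequence: {gib:.1f} GiB")
```

Under these assumptions the cached state per token and layer falls from 32,768 values to 576, roughly a 57x reduction; the real saving depends on the actual model dimensions and storage precision.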

MTP (Multi-Token Prediction): While typical large language models are trained to predict only the next token, DeepSeek also trains its model to predict several upcoming tokens at each position, improving contextual coherence and reducing intermediate steps for tasks like math, code, and summarization.
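Multi-token prediction mainly changes the training targets. The sketch below builds, for each position, the next `depth` tokens as targets, and combines losses in the form the DeepSeek-V3 report describes: the main next-token loss plus a weighted average of the losses at the extra depths. The token ids, helper names, and weight value are hypothetical, and the prediction modules themselves are omitted.

```python
from typing import List, Sequence


def mtp_targets(tokens: Sequence[int], depth: int) -> List[List[int]]:
    """For each position i, collect the next `depth` tokens (i+1 .. i+depth) as targets.

    Column 0 is the ordinary next-token target; columns 1.. are the extra
    future tokens that the multi-token prediction modules learn to predict.
    Positions without `depth` future tokens are skipped.
    """
    return [list(tokens[i + 1:i + 1 + depth]) for i in range(len(tokens) - depth)]


def combined_loss(main_loss: float, mtp_losses: Sequence[float], weight: float) -> float:
    """Main next-token loss plus `weight` times the average loss over the extra depths."""
    if not mtp_losses:
        return main_loss
    return main_loss + weight * sum(mtp_losses) / len(mtp_losses)


if __name__ == "__main__":
    seq = [101, 7, 42, 9, 13, 102]  # hypothetical token ids
    print(mtp_targets(seq, depth=1))  # ordinary next-token targets only
    print(mtp_targets(seq, depth=2))  # next token plus the one after it
    print(combined_loss(2.0, [2.6], weight=0.3))  # hypothetical loss values
```

Each position now supplies more than one supervised target, which is the sense in which MTP densifies the training signal.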

CoT (Chain of Thought): Breaks complex problems into smaller logical steps for training and inference. DeepSeek uses annotated long-CoT data for fine-tuning and reward-based optimization during RL, resulting in clearer reasoning paths, multi-path solutions, and “aha moments” during inference.
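Rewarding chain-of-thought output during RL can be illustrated with simple rule-based checks. The DeepSeek-R1 report describes accuracy rewards and format rewards that require the reasoning to sit between `<think>` and `</think>` tags; the regex, the exact-match answer check, and the 0.5 format weight below are assumptions for illustration, and the function names are hypothetical.

```python
import re

# Assumed format: reasoning inside <think>...</think>, followed by a final answer.
FORMAT = re.compile(r"<think>.+?</think>\s*\S", re.DOTALL)


def format_reward(completion: str) -> float:
    """1.0 if the completion opens with a non-empty reasoning block followed by an answer."""
    return 1.0 if FORMAT.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the text after the last </think> tag exactly matches the reference answer."""
    answer = completion.rsplit("</think>", 1)[-1].strip()
    return 1.0 if answer == reference.strip() else 0.0


def cot_reward(completion: str, reference: str, format_weight: float = 0.5) -> float:
    """Hypothetical combination: accuracy counts fully, format earns partial credit."""
    return accuracy_reward(completion, reference) + format_weight * format_reward(completion)


if __name__ == "__main__":
    samples = [
        "<think>3 apples plus 4 apples makes 7 apples.</think> 7",
        "<think>I will guess.</think> 8",
        "7",
    ]
    for s in samples:
        print(repr(s), "->", cot_reward(s, "7"))
```

Rules like these are cheap to evaluate and hard to game compared with a learned reward model, which makes them a natural fit for tasks with checkable answers such as math.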

DualPipe: Reduces idle time (pipeline “bubbles”) in traditional training pipelines through a dual-stream design. While one batch waits for data transfer, computation switches to a different batch, so time that would otherwise be idle is put to use.
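The benefit of overlapping can be estimated with a simple two-stream timing model. This is a generic compute/communication overlap sketch, not DeepSeek's DualPipe schedule (which is bidirectional and more involved); it assumes independent micro-batches with fixed, hypothetical compute and transfer times.

```python
def sequential_time(n_batches: int, compute: float, comm: float) -> float:
    """Each micro-batch computes, then the device idles while its data is transferred."""
    return n_batches * (compute + comm)


def overlapped_time(n_batches: int, compute: float, comm: float) -> float:
    """Transfer of batch i runs on a second stream while batch i+1 computes.

    The first computation and the last transfer cannot be hidden; in between,
    the slower of the two streams sets the pace.
    """
    if n_batches <= 0:
        return 0.0
    return compute + comm + (n_batches - 1) * max(compute, comm)


if __name__ == "__main__":
    n, compute, comm = 8, 1.0, 1.0  # hypothetical micro-batch count and timings
    seq, ovl = sequential_time(n, compute, comm), overlapped_time(n, compute, comm)
    print(f"sequential={seq}, overlapped={ovl}, speedup={seq / ovl:.2f}x")
```

When compute and transfer take similar time, overlapping nearly halves the total; when one stream dominates, the gain shrinks toward hiding only the shorter one.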

R1-Zero: Built on the V3 base model, R1-Zero is trained solely through reinforcement learning without SFT, exploring independent reasoning without human-labeled data. R1, by contrast, still incorporates SFT data to improve the quality of its reasoning output.
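Training without SFT relies on the reward signal alone. The DeepSeek-R1 report trains R1-Zero with Group Relative Policy Optimization (GRPO), which samples several answers per prompt and scores each one against its group instead of using a learned critic. The sketch below shows only that advantage step; the function name is hypothetical, population standard deviation is an assumption, and the clipped policy-gradient update and KL penalty are omitted.

```python
import statistics
from typing import List, Sequence


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-8) -> List[float]:
    """Normalize each sampled answer's reward against the other answers for the same prompt.

    The group mean serves as the baseline, so no learned value network (critic) is needed.
    Population standard deviation is used here; that choice is an assumption.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Hypothetical rewards for four answers sampled for one prompt.
    rewards = [1.5, 0.5, 0.0, 1.0]
    for r, a in zip(rewards, group_relative_advantages(rewards)):
        print(f"reward={r:.1f} advantage={a:+.2f}")
```

Answers better than their siblings get positive advantages and are reinforced; if every answer earns the same reward, all advantages are zero and the prompt contributes no update.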

FP8 Mixed Precision Training: Performs most computation in FP8, which halves memory per value compared to FP16, while keeping precision-sensitive modules in FP16/FP32 for numerical stability.
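FP8 in the E4M3 format keeps only 3 mantissa bits and a small range, so values are usually rescaled before casting. The sketch below emulates this in plain Python: it scales a block so its largest magnitude maps to the E4M3 maximum of 448, rounds each value to the nearest representable E4M3 number, and keeps the scale in full precision for dequantization. Per-block scaling loosely mirrors the fine-grained quantization described in the DeepSeek-V3 report; the helper names and block contents are illustrative assumptions, and real training uses hardware FP8 kernels rather than this emulation.

```python
import math
from typing import List, Sequence, Tuple

E4M3_MAX = 448.0              # largest finite FP8 E4M3 value
E4M3_MIN_NORMAL = 2.0 ** -6   # smallest normal E4M3 value
E4M3_SUBNORMAL_STEP = 2.0 ** -9


def round_to_e4m3(x: float) -> float:
    """Round to the nearest E4M3 value (round-half-to-even), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = math.copysign(1.0, x)
    mag = min(abs(x), E4M3_MAX)
    if mag < E4M3_MIN_NORMAL:
        # Subnormal range: evenly spaced steps of 2^-9.
        return sign * round(mag / E4M3_SUBNORMAL_STEP) * E4M3_SUBNORMAL_STEP
    mantissa, exponent = math.frexp(mag)   # mag = mantissa * 2**exponent, 0.5 <= mantissa < 1
    mantissa = round(mantissa * 16) / 16   # 1 implicit + 3 explicit mantissa bits
    return sign * min(mantissa * 2.0 ** exponent, E4M3_MAX)


def quantize_block(values: Sequence[float]) -> Tuple[List[float], float]:
    """Scale a block so its largest magnitude maps to 448, then round each value to E4M3."""
    amax = max(abs(v) for v in values)
    scale = amax / E4M3_MAX if amax > 0 else 1.0
    return [round_to_e4m3(v / scale) for v in values], scale


def dequantize_block(codes: Sequence[float], scale: float) -> List[float]:
    """Undo the per-block scaling; the rounding error remains."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    block = [0.1, -0.25, 3.0, 0.0]  # hypothetical activations
    codes, scale = quantize_block(block)
    print("codes:", codes, "scale:", scale)
    print("restored:", dequantize_block(codes, scale))
```

Scaling per block keeps an outlier in one block from crushing the small values in another; within a block, values in the normal range are recovered with a relative error of at most 1/16.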

Communication Optimizations: High-efficiency communication kernels optimize bandwidth usage, supporting large-scale deployments.

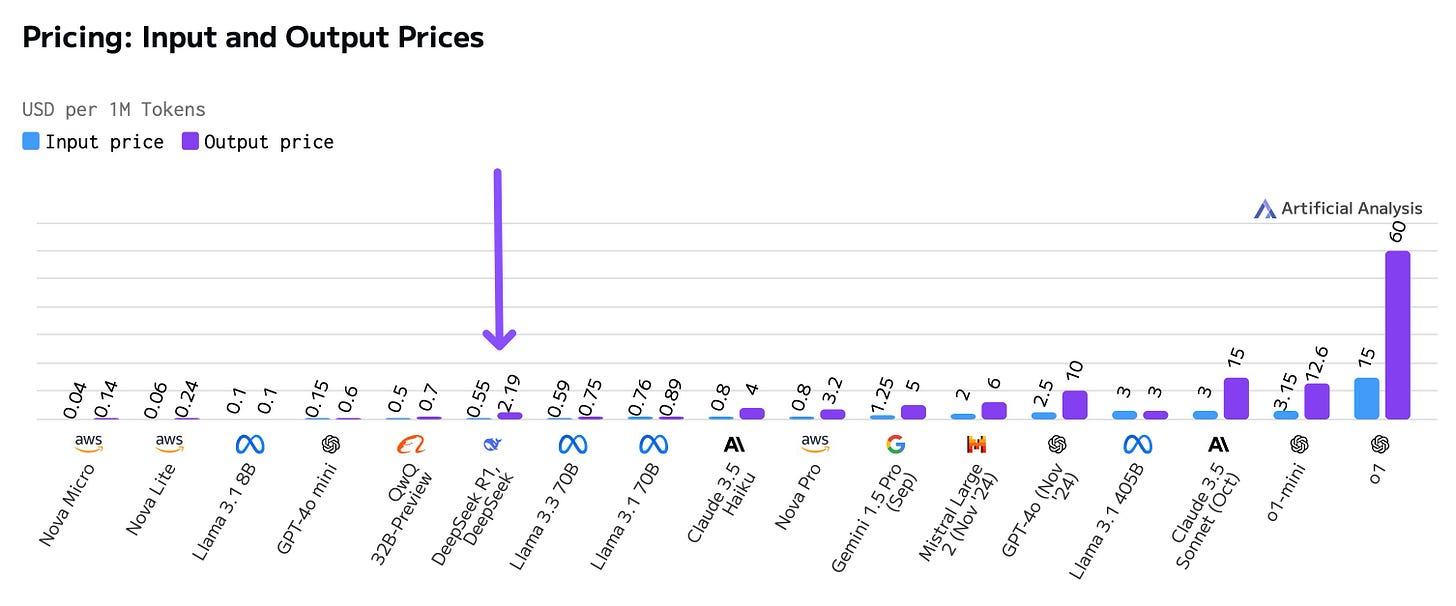

Training cost claims: Reports suggest DeepSeek’s training cost was $5.5 million, 1/10 of Meta's and 1/20 of OpenAI's. This comparison is exaggerated. In the U.S., pretraining a model with hundreds of billions of parameters currently costs under $20 million; DeepSeek brought its cost down to roughly one-third of that by building on existing advances and avoiding inefficiencies. Hardware costs have also dropped exponentially over the years, making direct cost comparisons less meaningful.
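The $5.5 million headline can be reproduced from the DeepSeek-V3 technical report, which lists about 2.788 million H800 GPU-hours for the final training run and assumes a rental price of $2 per GPU-hour. As the report itself notes, this covers only the official training run and excludes earlier research and ablation experiments, which is one reason direct comparisons mislead. The helper function below is a hypothetical illustration of that arithmetic.

```python
from typing import Mapping

# H800 GPU-hours as reported in the DeepSeek-V3 technical report.
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR = 2.0  # USD; the rental rate the report assumes


def training_cost(gpu_hours: Mapping[str, int], price_per_hour: float) -> float:
    """Rental-equivalent cost of the listed training stages only."""
    return sum(gpu_hours.values()) * price_per_hour


if __name__ == "__main__":
    total_hours = sum(GPU_HOURS.values())
    cost = training_cost(GPU_HOURS, PRICE_PER_GPU_HOUR)
    print(f"{total_hours:,} GPU-hours x ${PRICE_PER_GPU_HOUR:.2f}/h = ${cost / 1e6:.3f}M")
    # Compare with the document's "under $20 million" U.S. pretraining benchmark.
    print(f"share of a $20M run: {cost / 20e6:.0%}")
```

This gives about $5.58 million, roughly 28% of the $20 million benchmark, broadly consistent with the document's “one-third” estimate.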

Perspectives

Open-source triumphs over closed-source: DeepSeek represents a victory for open-source, fostering rapid community growth. This foundation will further empower open-source contributions, including Meta's open-source efforts.

Scaling Law and closed-source challenges: While OpenAI’s brute-force scaling approach may seem simplistic, breakthroughs could arise at certain scales, potentially widening the gap between closed- and open-source models.

Increased efficiency reduces proprietary dependency: As open-source models achieve parity with closed-source ones, cost-effectiveness diminishes the need to pay for APIs from providers like OpenAI, encouraging private deployments and customized fine-tuning for downstream applications.
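The trade-off between paying for an API and running a private deployment reduces to a break-even volume. This sketch treats self-hosting as a fixed monthly cost and API usage as a per-token price; both prices are hypothetical, the function names are illustrative, and it ignores capacity limits, staffing, and fine-tuning costs.

```python
def breakeven_tokens_per_month(self_host_monthly_cost: float,
                               api_price_per_million: float) -> float:
    """Monthly token volume at which a fixed-cost private deployment matches API spend."""
    return self_host_monthly_cost / api_price_per_million * 1_000_000


def cheaper_option(tokens_per_month: float, self_host_monthly_cost: float,
                   api_price_per_million: float) -> str:
    """Return "self-host" or "api", treating self-hosting as a pure fixed cost."""
    api_cost = tokens_per_month / 1_000_000 * api_price_per_million
    return "self-host" if self_host_monthly_cost < api_cost else "api"


if __name__ == "__main__":
    # Hypothetical figures: $20,000/month for private GPUs vs. $10 per million API tokens.
    print(f"break-even: {breakeven_tokens_per_month(20_000, 10):,.0f} tokens/month")
    for volume in (1e9, 5e9):
        print(f"{volume:,.0f} tokens/month -> {cheaper_option(volume, 20_000, 10)}")
```

Cheaper, stronger open models lower the fixed cost side of this comparison, which moves the break-even point down and makes private deployment attractive at smaller volumes.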

Commoditization of foundational models: In B2B, success hinges on integrating LLMs into complex production workflows to boost productivity. In B2C, traffic entry points will determine value capture.

Demand for computational power remains: The Jevons Paradox illustrates how increased efficiency drives higher overall consumption, evident in past technological cycles like mobile phone adoption.

Data remains critical: Better algorithms speed up problem-solving, which intensifies the demand for quality data to train and refine these models.

Acknowledgments

During this research, we engaged with several experts in academia and industry. While their names remain undisclosed due to the lack of explicit consent, we extend our gratitude here.

Archerman Capital™ is a U.S.-based growth equity investment firm specializing in AI, data infrastructure, and cybersecurity. Its portfolio includes companies like Databricks, Scale AI, and Tenstorrent. With offices in Boston, New York, and Silicon Valley, the firm employs a research-driven and first-principles approach. This document is purely for sharing purposes and not investment advice.