Beyond Copilot: The 2025 Battle for the “Engineering System”

Why the next moat in AI Coding isn’t the model—it’s the dirty work. Plus: An invitation to a private roundtable with Kuaishou’s Core Tech Team & Artificial Analysis in Palo Alto.

Walk down California Avenue in Palo Alto today, and you’ll notice the conversation has shifted.

Six months ago, the caffeine-fueled debates were all about latency—who could autocomplete a function faster. Today, the smartest Founders, Infra Leads, and VCs have stopped looking at speed and started staring at a much deeper, structural problem:

Now that models are actually good, how do we rebuild the engineering system to handle them?

After spending the last two months talking to dozens of builders across the Bay Area, our conclusion is clear: 2025 is the year AI Coding graduates from a “Copilot” (an assistant) to an “Agentic System” (the primary engineer).

The logic behind this transition is far more brutal—and far more lucrative—than simply “writing code faster.”

I. The Threshold: Crossing the Line of Usability

Why is this happening now?

Bluntly put: the models stopped hallucinating quite so much. With the arrival of the latest reasoning models (like OpenAI’s o-series, Claude 3.7, and DeepSeek-R1), we’ve seen a steep inflection point in three specific capabilities:

Instruction Following: LLMs can finally respect complex engineering constraints, not just generate a Python script that “looks” correct.

True Long Context: The context window is no longer the scarce resource it was. The “Lost in the Middle” phenomenon, where models overlook information buried in the middle of a long prompt, has been significantly mitigated.

Cross-File Reasoning: This was the biggest hurdle. Models have graduated from understanding a snippet to understanding a repository.

For top-tier founders, the excitement isn’t about code aesthetics. It’s about error rates dropping to a level where autonomy becomes viable. When reliability goes up, end-to-end tasks—automating bug fixes, running unit tests, and passing CI checks—move from science fiction to “table stakes.”

II. The Deep End: The “Dirty Work” is the New Moat

There is a massive misconception in the current market: Many people think AI Coding = An IDE + An LLM API.

They are wrong.

If the model is the brain, the engineering system is the nervous system. The real moat isn’t in the model itself (which is becoming a commodity); it lies in the unsexy, messy “Engineering Dirty Work”:

Context Orchestration: Even with long context, you can’t just dump a repo into a prompt. The hard part is retrieval: using high-quality embeddings to surgically inject the roughly 1% of code that is relevant to the task into the window.

AST (Abstract Syntax Tree) Mastery: Raw text generation is dangerous. Unless your agent works at the level of the AST, it cannot verify that a refactor is even structurally valid, let alone argue that it is logically safe.

Deep Tooling Integration: An agent that can’t run a linter, parse a CI/CD error log, or trace a compilation failure is just a toy.
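
To make the first two items concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any particular product works: the bag-of-words similarity is a toy stand-in for a real embedding model, the function names (`select_context`, `refactor_keeps_interface`) are hypothetical, and the AST check only confirms that a rewrite parses and keeps its top-level function signatures, which is far short of proving that behavior is preserved.

```python
import ast
import math
import re
from collections import Counter


def _vector(text: str) -> Counter:
    # Toy bag-of-words vector; an assumed stand-in for a real embedding model.
    return Counter(re.findall(r"[a-z_]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_context(task: str, snippets: dict[str, str], top_k: int = 1) -> list[str]:
    """Return the names of the top_k snippets most similar to the task text."""
    query = _vector(task)
    ranked = sorted(
        snippets,
        key=lambda name: _cosine(query, _vector(snippets[name])),
        reverse=True,
    )
    return ranked[:top_k]


def public_signatures(source: str) -> dict[str, list[str]]:
    """Map each top-level function to its positional-or-keyword argument names.

    Raises SyntaxError if the source does not parse.
    """
    tree = ast.parse(source)
    return {
        node.name: [arg.arg for arg in node.args.args]
        for node in tree.body
        if isinstance(node, ast.FunctionDef)
    }


def refactor_keeps_interface(before: str, after: str) -> bool:
    """True if `after` parses and keeps every top-level signature from `before`."""
    try:
        new = public_signatures(after)
    except SyntaxError:
        return False  # generated text that does not parse is rejected outright
    old = public_signatures(before)
    return all(new.get(name) == args for name, args in old.items())


if __name__ == "__main__":
    snippets = {
        "billing.py": "def charge_card(card, amount): return gateway.charge(card, amount)",
        "video.py": "def transcode_video(path, codec): return ffmpeg.run(path, codec)",
    }
    print(select_context("fix the bug in charge card amount rounding", snippets))

    before = "def add(a, b):\n    return a + b\n"
    after = "def add(a, b):\n    total = a + b\n    return total\n"
    print(refactor_keeps_interface(before, after))
```

In a real system the ranking step would sit on top of an embedding index, and the structural check would be one gate among several, alongside the linter, the test suite, and CI.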

The verdict is in: The war won’t be won at the Model layer, but at the Infra layer. Whoever figures out how to elegantly handle this “dirty work” owns the platform.

III. Why Kuaishou? Why KAT-Coder?

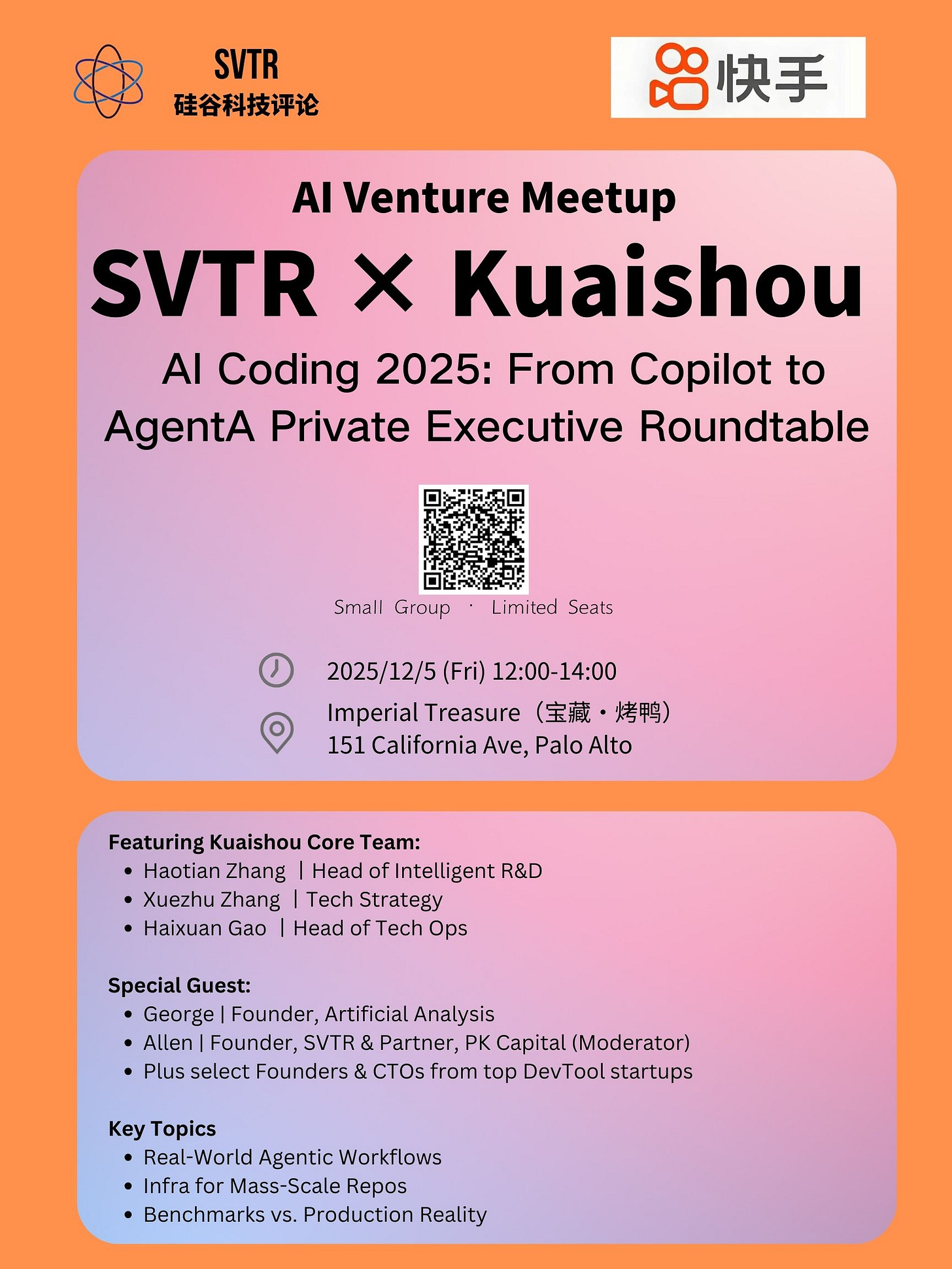

This is why SVTR, in collaboration with Kuaishou (快手), is hosting a closed-door roundtable.

Silicon Valley has no shortage of great models or slick demos. But in the realm of AI Coding, industrial-scale reality is a scarce resource.

While Kuaishou is known globally for its short-video platform and the high-concurrency systems behind it, their Code LLM team (KAT-Coder) brings a perspective that is rare even in the Valley: They have tested this technology in “Hell Mode.”

We are talking about hundreds of thousands of private repositories, a chaotic mix of legacy languages, strict enterprise CI/CD flows, and a production environment where “hallucination” means “outage.”

On Dec 5, the core team is flying in to share the battle scars:

Haotian Zhang, Head of Intelligent R&D Center

Xuetie Zhang, Tech Strategy & Development

Haixuan Gao, Head of Tech Operations Center

They will be joined by George, Founder of Artificial Analysis, to discuss the discrepancy between public benchmarks and real-world performance.

IV. The Invitation: Palo Alto, Dec 5

On the eve of this system rewrite, we don’t need more broad narratives about “The Future.” We need granular, high-bandwidth engineering discussions.

We are hosting a private lunch at Imperial Treasure (宝藏·烤鸭) in Palo Alto. No conferences, no badges. Just 15 people around a table, good food, and hard truths.

The Agenda:

Model Breakthroughs: Capabilities vs. Benchmarks vs. Reality (feat. Artificial Analysis).

KAT-Coder Roadmap: Performance tuning and inference optimization in massive repos.

Agentic Workflow: How to build tooling that Agents can actually use.

Investment Outlook: The next 12-24 months for AI DevTools.

The decade-long race to rewrite software engineering has just begun.

Join the Discussion

We have very limited seating (15 spots max) to ensure high-quality conversation.

Note: Attendance is strictly vetted. We prioritize Founders, CTOs, and active practitioners in the AI Coding/Infra space. Confirmation will be sent within 24 hours.